You wouldn’t write your username and passwords on a postcard and mail it for the world to see, so why are you doing it online? Every time you log in to any service that uses a plain HTTP connection that’s essentially what you’re doing.

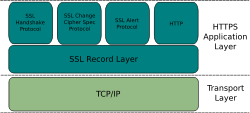

There is a better way, the secure version of HTTP—HTTPS. HTTPS has been around nearly as long as the Web, but it’s primarily used by sites that handle money. HTTPS is the combination of HTTP and TLS. Transport Layer Security (TLS) and its predecessor, Secure Sockets Layer (SSL), are cryptographic protocols that provide communications security over the Internet.

Web security got a shot in the arm last year when the FireSheep network sniffing tool made it easy for anyone to capture your current session’s log-in cookie on insecure networks. That prompted a number of large sites to begin offering encrypted versions of their services via HTTPS connections. So the Web is clearly moving toward more HTTPS connections; why not just make everything HTTPS?

The article “HTTPS is more secure, so why isn’t the Web using it?” gives some interesting background on HTTPS. There are also some practical issues that most Web developers are probably aware of.

The real problem is that with HTTPS you lose the ability to cache. For sites that don’t have any reason to encrypt anything (you never log in and just see public information), the overhead and loss of caching that comes with HTTPS just doesn’t make sense. Most of the content on this site, for example, has no reason to be encrypted.

The initial SSL/TLS key exchange also adds latency, so an HTTPS-only Web would, with today’s technology, be slower. The fact that more and more websites are adding support for HTTPS shows that users do value security over speed, so long as the speed difference is minimal.
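As a rough illustration of that latency cost, here is a minimal sketch that estimates the extra connection-setup time from round trips alone. The round-trip counts are assumptions for illustration (a full TLS 1.2 handshake adds two round trips on top of the TCP handshake, a full TLS 1.3 handshake adds one), and the function name and sample RTT values are hypothetical. It ignores CPU cost and session resumption.

```python
# Extra round trips a full TLS handshake adds before the first HTTP request.
# Assumed values: TLS 1.2 full handshake = 2, TLS 1.3 full handshake = 1.
EXTRA_ROUND_TRIPS = {"TLSv1.2": 2, "TLSv1.3": 1}


def added_handshake_latency_ms(rtt_ms: float, tls_version: str = "TLSv1.2") -> float:
    """Estimate the setup delay (in ms) that TLS adds over plain HTTP."""
    return EXTRA_ROUND_TRIPS[tls_version] * rtt_ms


if __name__ == "__main__":
    for rtt in (20, 100, 300):  # hypothetical LAN, broadband, and mobile RTTs
        print(rtt, "ms RTT ->", added_handshake_latency_ms(rtt), "ms extra")
```

On a fast link the difference is small; on a high-latency mobile link the same handshake can add a noticeable delay.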

The cost of operating an HTTPS site is higher than for plain HTTP: you need certificates, which cost money, and more server resources. The certificate cost is obviously not much of an issue for large Web services that have millions of dollars, but it can be a showstopper for some smaller low-budget sites.

Perhaps the main reason most of us are not using HTTPS to serve our websites is simply that it doesn’t work well with virtual hosts. There is a way to make virtual hosting and HTTPS work together (the TLS Extensions protocol Server Name Indication (SNI)) but so far, it’s only partially implemented.
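To make the SNI idea concrete, here is a minimal client-side sketch using Python’s standard `ssl` module. The function names are hypothetical; the key point is that passing `server_hostname` sends the requested host name during the TLS handshake, so a server hosting many sites on one IP address can choose the matching certificate.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Return a client context that verifies certificates and host names."""
    return ssl.create_default_context()


def fetch_certificate_subject(host: str, port: int = 443, timeout: float = 5.0):
    """Connect to host and return the subject of the certificate it presents.

    Passing server_hostname makes the client send the SNI extension, which
    lets a server with many virtual hosts pick the matching certificate.
    """
    context = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["subject"]


# ssl.HAS_SNI reports whether the underlying OpenSSL build supports SNI.
print("SNI supported by this Python build:", ssl.HAS_SNI)
```

Calling `fetch_certificate_subject("example.com")` (a placeholder host) needs network access, so it is left out of the snippet itself.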

In the end there is no real technical reason the whole Web couldn’t use HTTPS. There are only practical reasons why it isn’t happening just yet.

62 Comments

Tomi Engdahl says:

In-depth: How CloudFlare promises SSL security—without the key

CEO shares technical details about changing the way encrypted sessions operate.

http://arstechnica.com/information-technology/2014/09/in-depth-how-cloudflares-new-web-service-promises-security-without-the-key/

Content delivery network and Web security company CloudFlare has made a name for itself by fending off denial-of-service attacks against its customers large and small. Today, it’s launching a new service aimed at winning over the most paranoid of corporate customers. The service is a first step toward doing for network security what Amazon Web Services and other public cloud services have done for application services—replacing on-premises hardware with virtualized services spread across the Internet.

Called Keyless SSL, the new service allows organizations to use CloudFlare’s network of 28 data centers around the world to defend against distributed denial of service attacks on their websites without having to turn over private encryption keys. Keyless SSL breaks the encryption “handshake” at the beginning of a Transport Layer Security (TLS) Web session, passing part of the data back to the organization’s data center for encryption. It then negotiates the session with the returned data and acts as a gateway for authenticated sessions—while still being able to screen out malicious traffic such as denial of service attacks.

In an interview with Ars, CloudFlare CEO Matthew Prince said that the technology behind Keyless SSL could help security-minded organizations embrace other cloud services while keeping a tighter rein on them. “If you decide you’re going to use cloud services today, how you set policy across all of these is impossible,” he said. “Now that we can do this, fast forward a year, and we can do things like data loss prevention, intrusion detection… all these things are just bytes in the stream, and we’re already looking at them.”

The development of Keyless SSL began about two years ago, on the heels of a series of massive denial of service attacks against major financial institutions alleged to have been launched from Iran.

The banks weren’t able to use existing content delivery networks and other cloud technology to protect themselves either, because of the regulatory environment. “They said, ‘We can’t trust our SSL keys with a third party, because if they lose one of those keys, it’s an event we have to report to the Federal Reserve.’”

Prince and his team had nothing to offer the banks at the time. “These guys need us, but there’s no vault we can ever build that they’ll trust us with their SSL keys,” he said. So CloudFlare system engineers Piotr Sikora and Nick Sullivan started working on ways to allow the banks to hold onto their private keys. The answer was to change what happens with the SSL handshake itself.

Once that’s complete, the CloudFlare data center is able to manage the session with the client, caching the session key and using it to encrypt cached, static content from the organization’s website back to the client. Requests for dynamic data are passed through to the back-end servers on the organization’s server, and responses are passed through (encrypted) to the client Web browser.

The client, Cloudflare’s service, and the backend server all then have the same session key.
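The split described above can be pictured with a toy model. This is only a sketch under stated assumptions, not CloudFlare’s protocol: it assumes an RSA-style key exchange in which the client encrypts a secret to the site’s public key (a detail the excerpt does not spell out), it uses textbook RSA with tiny numbers and no padding, and the class and function names are hypothetical. What it shows is that the edge server can complete the exchange while the private key never leaves the organization’s own key server.

```python
# Toy model only: textbook RSA with tiny numbers, no padding, not secure.
# p = 61, q = 53 gives modulus n = 3233; e and d are a matching key pair.
N, E, D = 3233, 17, 2753


class KeyServer:
    """Runs in the customer's data center; the only holder of the private key."""

    def __init__(self, private_exponent: int, modulus: int) -> None:
        self._d = private_exponent
        self._n = modulus

    def decrypt(self, ciphertext: int) -> int:
        return pow(ciphertext, self._d, self._n)


class EdgeServer:
    """Runs at the CDN edge; knows the public key but never the private one."""

    def __init__(self, key_server: KeyServer) -> None:
        self._key_server = key_server
        self.session_secret = None

    def handle_key_exchange(self, encrypted_secret: int) -> None:
        # The edge cannot decrypt, so it forwards the private-key operation.
        self.session_secret = self._key_server.decrypt(encrypted_secret)


def client_encrypt(secret: int) -> int:
    """Client encrypts its secret with the site's public key (E, N)."""
    return pow(secret, E, N)


edge = EdgeServer(KeyServer(D, N))
client_secret = 65
edge.handle_key_exchange(client_encrypt(client_secret))
print(edge.session_secret == client_secret)  # True
```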

CloudFlare’s data centers can spread out requests for a single session across all the servers in each data center through CloudFlare’s key store, an in-memory database of hashed session IDs and tickets.

Prince said that CloudFlare already has a “handful of beta customers, which include some of the top 10 financial institutions,” up and running on Keyless SSL.

Tomi Engdahl says:

The Cost of the “S” In HTTPS

http://yro.slashdot.org/story/14/12/04/1513255/the-cost-of-the-s-in-https

Researchers from CMU, Telefonica, and Politecnico di Torino have presented a paper at ACM CoNEXT that quantifies the cost of the “S” in HTTPS. The study shows that today major players are embracing end-to-end encryption, so that about 50% of web traffic is carried by HTTPS. This is a nice testament to the feasibility of having a fully encrypted web.

The Cost of the “S” in HTTPS

http://www.cs.cmu.edu/~dnaylor/CostOfTheS.pdf

Increased user concern over security and privacy on the Internet has led to widespread adoption of HTTPS, the secure version of HTTP. HTTPS authenticates the communicating end points and provides confidentiality for the ensuing communication. However, as with any security solution, it does not come for free. HTTPS may introduce overhead in terms of infrastructure costs, communication latency, data usage, and energy consumption.

Motivated by increased awareness of online privacy, the use of HTTPS has increased in recent years. Our measurements reveal a striking ongoing technology shift, indirectly suggesting that the infrastructural cost of HTTPS is decreasing. However, HTTPS can add direct and noticeable protocol-related performance costs, e.g., significantly increasing latency, critical in mobile networks. More interesting, though more difficult to fully understand, are the indirect consequences of HTTPS: most in-network services simply cannot function on encrypted data.

For example, we see that the loss of caching could cost providers an extra 2 TB of upstream data per day and could mean increases in energy consumption upwards of 30% for end users in certain cases. Moreover, many other value-added services, like parental controls or virus scanning, are similarly affected, though the extent of the impact of these “lost opportunities” is not clear.

What is clear is this: the “S” is here to stay, and the network community needs to work to mitigate the negative repercussions of ubiquitous encryption. To this end, we see two parallel avenues of future work:

First, low-level protocol enhancements to shrink the performance gap, like Google’s ongoing efforts to achieve “0-RTT” handshakes.

Second, to restore in-network middlebox functionality to HTTPS sessions, we expect to see trusted proxies become an important part of the Internet ecosystem.

Tomi Engdahl says:

Why “Let’s Encrypt” Won’t Make the Internet More Trustworthy

http://www.securityweek.com/why-lets-encrypt-wont-make-internet-more-trustworthy

Internet users have been shaming Lenovo for shipping the SuperFish adware on their flagship ThinkPad laptops. SuperFish was inserting itself into the end-users’ SSL sessions with a certificate whose key has since been discovered (fascinating write-up by @ErrataRob here).

Clearly the certificate used by SuperFish is untrustworthy now. Browsers and defensive systems like Microsoft Windows Defender are marking it as bad. But for most certificates the status isn’t entirely as clear.

Observable Internet encryption is largely made up of SSL servers listening on port 443. On the Internet, approximately 40% of these servers have so-called “self-signed” certificates. A “real” certificate (trusted by your browser) is signed by a trusted third party called a certificate authority: VeriSign, Comodo, GlobalSign, GoDaddy are a few of the tier 1 providers. But a server whose certificate is signed with its own key is called self-signed and is considered untrustworthy. It is sort of strange, isn’t it, that there’s all this software that can perform sophisticated encryption on the Internet, yet much of it is junk.
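The distinction can be sketched in a few lines. This is a heuristic under stated assumptions, not a full validity check: it takes a certificate in the dict layout that Python’s `ssl.SSLSocket.getpeercert()` returns and flags it when the issuer and subject names are identical. The function name and the sample certificates are hypothetical.

```python
def looks_self_signed(cert: dict) -> bool:
    """Heuristic: a certificate whose issuer equals its subject.

    `cert` uses the dict layout returned by ssl.SSLSocket.getpeercert().
    Matching names show the certificate is self-issued; proving it is
    truly self-signed would also require checking the signature.
    """
    return bool(cert.get("subject")) and cert.get("subject") == cert.get("issuer")


# Hypothetical sample data in getpeercert() format.
router_cert = {
    "subject": ((("commonName", "192.168.1.1"),),),
    "issuer": ((("commonName", "192.168.1.1"),),),
}
site_cert = {
    "subject": ((("commonName", "www.example.com"),),),
    "issuer": ((("organizationName", "Example CA"),), (("commonName", "Example CA R1"),)),
}

print(looks_self_signed(router_cert), looks_self_signed(site_cert))  # True False
```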

In mid-2015, the Electronic Frontier Foundation (EFF) will be launching a free, open Certificate Authority called “Let’s Encrypt” with an aim to reducing the stumbling blocks that prevent us from “encrypting the web.”

If Let’s Encrypt succeeds, will self-signed certificates go extinct?

I’m guessing no. I’ll explain why, and why I think that’s not necessarily a bad thing.

There are three reasons certificates are self-signed.

Cost

The first and most quantitative reason for self-signing is because real certificates cost real money. A world-class “extended validation” certificate, perhaps used by a global financial institution and trusted by every browser, might cost $1,000 per year. So it’s no wonder that there are over 10 million sites with self-signed certificates, right? These sites would represent $10 billion in subscriptions per year if they all had real certificates.

There are already bargain certificate vendors who provide no-frills, no-questions-asked certificates for $5 per year or even less.
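The arithmetic behind those figures is easy to check. The short sketch below uses only the numbers quoted above (10 million sites, $1,000 and $5 per year); the function name is hypothetical.

```python
SELF_SIGNED_SITES = 10_000_000  # "over 10 million sites" in the article


def yearly_cost(price_per_certificate: int, sites: int = SELF_SIGNED_SITES) -> int:
    """Total yearly spend if every site bought a certificate at this price."""
    return price_per_certificate * sites


print(f"${yearly_cost(1_000):,}")  # extended validation: $10,000,000,000
print(f"${yearly_cost(5):,}")      # bargain certificate: $50,000,000
```

At bargain prices the total drops from $10 billion to $50 million a year, which is why cost alone does not explain the number of self-signed certificates.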

Apathy

One of the most common scenarios resulting in a self-signed certificate is when a new device like a router or webcam gets deployed in a small network. The software in the device supports HTTPS (and not HTTP, otherwise it would set off all kinds of compliance alerts) but it is up to the user to get a real certificate (from a certificate authority) and stick it on there. Unless the user is a security professional of some kind, they probably aren’t going to get a certificate for their device, regardless of the price.

Ignorance

Ask a penetration tester or DAST vendor, and they’ll tell you that a huge number of devices on the Internet were never meant to be there in the first place.

To see how often this really happens, you don’t have to look any further than the infamous SHODAN search engine, which can find weird devices on the Internet based on their HTTP headers.

Will Let’s Encrypt fix all these routers and webcams? No.

I predict that Let’s Encrypt probably won’t make a huge impact on the number of self-signed certificates out there, and maybe that’s not a bad thing.

Will Let’s Encrypt Succeed?

Even though Let’s Encrypt has no apparent business model, let’s suppose for a minute that it gets some market share. Over time, vendors might trust its longevity enough to build support into their routers, webcams, and other devices. When their end-user customers set up the device, it would automatically fetch a certificate from Let’s Encrypt. That’s the only world in which self-signed certificates might become a thing of the past. But that world is years away. So like the junk DNA within all of us, I predict that self-signed certificates will live on for at least a few more generations.

Tomi Engdahl says:

‘All browsing activity should be considered private and sensitive’ says US CIO

Stop laughing about the NSA and read this plan to make HTTPS the .gov standard

http://www.theregister.co.uk/2015/03/18/all_browsing_activity_should_be_considered_private_and_sensitive_says_us_cio/

The CIO of the United States has floated a plan to make HTTPS the standard for all .gov websites.

“The majority of Federal websites use HTTP as the primary protocol to communicate over the public internet,” says the plan, which also states that HTTP connections “create a privacy vulnerability and expose potentially sensitive information about users of unencrypted Federal websites and services.”

“All browsing activity should be considered private and sensitive,” the proposal continues (cough – NSA – cough) before suggesting “An HTTPS-Only standard will eliminate inconsistent, subjective decision-making regarding which content or browsing activity is sensitive in nature, and create a stronger privacy standard government-wide.”

The proposal acknowledges that “The administrative and financial burden of universal HTTPS adoption on all Federal websites includes development time, the financial cost of procuring a certificate and the administrative burden of maintenance over time” and notes that HTTPS can slow servers and sometimes complicate the browsing experience.

The HTTPS-Only Standard

https://https.cio.gov/

The American people expect government websites to be secure and their interactions with those websites to be private. Hypertext Transfer Protocol Secure (HTTPS) offers the strongest privacy protection available for public web connections with today’s internet technology. The use of HTTPS reduces the risk of interception or modification of user interactions with government online services.

This proposed initiative, “The HTTPS-Only Standard,” would require the use of HTTPS on all publicly accessible Federal websites and web services.

Tomi Engdahl says:

Lauren Hockenson / The Next Web:

EFF’s free HTTPS tool ‘Let’s Encrypt’ enters public beta

http://thenextweb.com/dd/2015/12/03/effs-free-https-tool-lets-encrypt-enters-public-beta/

Really, there’s no good reason for devs not to adopt HTTPS certification. The added protection makes you and your users safer, and helps guarantee that nothing injects malware, tracking or unwanted ads into your users’ experience.

The Electronic Frontier Foundation developed its ‘Let’s Encrypt’ tool to make HTTPS certification faster, easier and free for anyone to use. Developed with sponsorship from Mozilla, the University of Michigan, Cisco, Akamai and others, the tool is now in Public Beta, which means that anyone with a website can set up the automated process to get an HTTPS certificate.

According to the EFF, the process of adopting HTTPS has historically been difficult, and incurs cost on the website’s owner. By creating an automated tool, the barriers to HTTPS no longer exist, and more websites will be safe overall.

Let’s Encrypt Enters Public Beta

https://www.eff.org/deeplinks/2015/12/lets-encrypt-enters-public-beta

So if you run a server, and need certificates to deploy HTTPS, you can run the beta client and get one right now.

We’ve still got a lot to do. This launch is a Public Beta to indicate that, as much as today’s release makes setting up HTTPS easier, we still want to make a lot more improvements towards our ideal of fully automated server setup and renewal. Our roadmap includes many features including options for complete automation of certificate renewal, support for automatic configuration of more kinds of servers (such as Nginx, postfix, exim, or dovecot), and tools to help guide users through the configuration of important Web security features such as HSTS, upgrade-insecure-requests, and OCSP Stapling.

Tomi Engdahl says:

Anti-Hack: Free Automated SSL Certificates

http://hackaday.com/2016/03/20/anti-hack-free-automated-ssl-certificates/

There was a time when getting a secure certificate (at least one that was meaningful) cost a pretty penny. However, a new initiative backed by some major players (like Cisco, Google, Mozilla, and many others) wants to give you a free SSL certificate. One reason they can afford to do this is they have automated the verification process so the cost to provide a certificate is very low.

That hasn’t always been true. Originally, trusted certificates were quite expensive. To understand why, you need to think about what an SSL certificate really means. First, you could always get a free certificate by simply creating one. The price was right, but the results left something to be desired.

A certificate contains a server’s public key, so any key is good enough to encrypt data to the server so that no one else can eavesdrop. What it doesn’t do is prove that the server is who they say they are. A self-generated certificate says “Hey! I’m your bank!” But there’s no proof of that.

To get that proof, you need two things. You need your certificate signed by a certificate authority (CA). You also need the Web browser (or other client) to accept the CA. A savvy user might install special certificates, but for the most utility, you want a CA which browsers already recognize.

With the Let’s Encrypt verification, you must have the right to either configure a DNS record or place a file on the server — a process with which webmasters are already familiar.
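As a sketch of what those two checks look like in practice, the snippet below computes where the proof goes for each one. The details (the `/.well-known/acme-challenge/` path, the `_acme-challenge` DNS label, and the SHA-256 digest) come from the ACME specification as I understand it, not from the article, and the token and thumbprint values a real client would receive are replaced here by hypothetical placeholders.

```python
import base64
import hashlib


def key_authorization(token: str, account_thumbprint: str) -> str:
    """Value the CA expects to find: token joined to the account key thumbprint."""
    return f"{token}.{account_thumbprint}"


def http_challenge(token: str, account_thumbprint: str) -> tuple[str, str]:
    """Return (URL path, file body) for the file-on-server check."""
    path = f"/.well-known/acme-challenge/{token}"
    return path, key_authorization(token, account_thumbprint)


def dns_challenge(domain: str, token: str, account_thumbprint: str) -> tuple[str, str]:
    """Return (record name, TXT value) for the DNS check."""
    key_auth = key_authorization(token, account_thumbprint).encode("ascii")
    digest = hashlib.sha256(key_auth).digest()
    # base64url without padding, as used for the TXT record value
    txt_value = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"_acme-challenge.{domain}", txt_value


# Placeholder values; a real client gets the token from the CA.
print(http_challenge("example-token", "example-thumbprint"))
print(dns_challenge("example.com", "example-token", "example-thumbprint")[0])
```

Either way, the CA only learns that whoever requested the certificate controls the domain’s Web server or DNS zone, which is exactly the automated verification that keeps the cost low.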

Tomi Engdahl says:

We Said We’d Be Transparent … WIRED’s First Big HTTPS Snag

https://www.wired.com/2016/05/wired-first-big-https-rollout-snag/

Two weeks ago, WIRED.com tackled a huge security upgrade by starting an HTTPS transition across our site. (What’s HTTPS, and why is it such a big deal? Read all about it here.) The original plan was to launch HTTPS on our Security vertical and then roll it out across all of WIRED.com by May 12. However, only our Transportation vertical is making the switch today. We set ambitious goals for our HTTPS transition, so our revised timeline isn’t a total surprise, but we promised we’d be transparent about the process with our readers. So here are the unique challenges that are making our HTTPS launch take a little longer than we’d hoped.

SEO

Temporary SEO changes on your site are a possible consequence of transitioning to HTTPS.

This type of SEO change is not without precedent. We expect that our site will rebound, so we are giving it more time to recover before committing to HTTPS everywhere.

Mixed Content Issues

As we previously explained, one of the biggest challenges of moving to HTTPS is preparing all of our content to be delivered over secure connections. If a page is loaded over HTTPS, all other assets (like images and Javascript files) must also be loaded over HTTPS. We are seeing a high volume of reports of these “mixed content” issues, or events in which an insecure, HTTP asset is loaded in the context of a secure, HTTPS page. To do our rollout right, we need to ensure that we have fewer mixed content issues, so that we are delivering as much of WIRED.com’s content as securely as possible.
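A rough way to find such issues in stored pages is to scan the HTML for assets referenced with `http://` URLs. The sketch below uses Python’s standard `html.parser`; it is not WIRED’s tooling, the names are hypothetical, and it does not inspect `rel` values, CSS, or requests that scripts make at runtime.

```python
from html.parser import HTMLParser

# Tag/attribute pairs that usually trigger a subresource fetch (a rough list).
# Plain <a href> links are navigation, not mixed content, so they are skipped.
ASSET_ATTRS = {
    ("img", "src"), ("script", "src"), ("iframe", "src"),
    ("link", "href"), ("audio", "src"), ("video", "src"), ("source", "src"),
}


class MixedContentFinder(HTMLParser):
    """Collects asset URLs that would load over plain HTTP."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure_urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in ASSET_ATTRS and value and value.lower().startswith("http://"):
                self.insecure_urls.append(value)


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure_urls


page = """
<img src="http://cdn.example.com/photo.jpg">
<script src="https://cdn.example.com/app.js"></script>
<a href="http://example.com/old-story">link</a>
"""
print(find_mixed_content(page))  # ['http://cdn.example.com/photo.jpg']
```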

“When people ask why transitioning to HTTPS is so difficult, this is why: Sites like WIRED.com have a massive amount of data to process and understand.”

We’ve learned a lot by monitoring mixed content issues in the past two weeks.

We’ve been trying to find a suitable metric for gauging progress on handling mixed content issues. So far, we’ve found the ratio of mixed content issues to page views to be helpful. This metric is not affected by spikes in traffic and is thus a good metric to compare day-to-day progress towards our goals of minimizing mixed content issues.
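To see why a ratio is steadier than a raw count, consider this small sketch. The numbers and the function name are hypothetical; only the metric itself (mixed content issues divided by page views) comes from the text above.

```python
def mixed_content_rate(issue_reports: int, page_views: int) -> float:
    """Mixed content issues per page view; 0.0 when there is no traffic."""
    return issue_reports / page_views if page_views else 0.0


# Hypothetical numbers: traffic triples on day two, but the rate is unchanged.
day_one = mixed_content_rate(issue_reports=500, page_views=100_000)
day_two = mixed_content_rate(issue_reports=1_500, page_views=300_000)
print(day_one, day_two)  # 0.005 0.005
```

The raw count of reports tripled, yet the rate shows that nothing got worse.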

What’s Next?

We promised we would be transparent about the struggles and triumphs of our HTTPS rollout. Today we’re acknowledging a delay—but we’ve got good news too. If you read this article about our editor Alex Davies blacking out in a jet, you’ll see that you are reading it over HTTPS.

Tomi Engdahl says:

YouTube is now 97% encrypted so you can watch your cat videos in peace

http://thenextweb.com/google/2016/08/01/youtube-now-97-encrypted-can-watch-cat-videos-peace/

In a blog post, YouTube today announced that its video service is now 97 percent encrypted.

The company blames not being at 100 percent on some devices being unable to support modern HTTPS, and it hopes to “phase out insecure connections” over time. How long that’ll take remains a mystery, but given that it was at 77 percent in November 2014, full encryption shouldn’t be too far in the future.

HTTPS is important in services like video streaming because it can protect users from hackers planting malware via insecure connections.

YouTube’s road to HTTPS

https://youtube-eng.blogspot.se/2016/08/youtubes-road-to-https.html

We’re proud to announce that in the last two years, we steadily rolled out encryption using HTTPS to 97 percent of YouTube’s traffic.

We’re also proud to be using HTTP Strict Transport Security (HSTS) on youtube.com to cut down on HTTP to HTTPS redirects. This improves both security and latency for end users. Our HSTS lifetime is one year, and we hope to preload this soon in web browsers.
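For reference, HSTS is delivered as a single response header. The helper below is a minimal sketch, not YouTube’s configuration: the function name is hypothetical, and the one-year default simply mirrors the lifetime mentioned above.

```python
ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # 31,536,000


def hsts_header(max_age: int = ONE_YEAR_SECONDS,
                include_subdomains: bool = False,
                preload: bool = False) -> tuple[str, str]:
    """Build the Strict-Transport-Security response header as (name, value)."""
    directives = [f"max-age={max_age}"]
    if include_subdomains:
        directives.append("includeSubDomains")
    if preload:
        directives.append("preload")
    return "Strict-Transport-Security", "; ".join(directives)


print(hsts_header())  # ('Strict-Transport-Security', 'max-age=31536000')
```

Once a browser has seen this header over HTTPS, it goes straight to HTTPS for that host until `max-age` expires, which is what removes the redirect round trip.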

97 percent is pretty good, but why isn’t YouTube at 100 percent? In short, some devices do not fully support modern HTTPS.

In the real world, we know that any non-secure HTTP traffic could be vulnerable to attackers. All websites and apps should be protected with HTTPS.

Tomi Engdahl says:

Emily Schechter / Google Online Security Blog:

Chrome will start marking HTTP pages with password and credit card form fields as non-secure in January 2017 — To help users browse the web safely, Chrome indicates connection security with an icon in the address bar. Historically, Chrome has not explicitly labelled HTTP connections as non-secure

Moving towards a more secure web

https://security.googleblog.com/2016/09/moving-towards-more-secure-web.html

To help users browse the web safely, Chrome indicates connection security with an icon in the address bar. Historically, Chrome has not explicitly labelled HTTP connections as non-secure. Beginning in January 2017 (Chrome 56), we’ll mark HTTP sites that transmit passwords or credit cards as non-secure, as part of a long-term plan to mark all HTTP sites as non-secure.

Tomi Engdahl says:

Websites Increasingly Use HTTPS: Google

http://www.securityweek.com/websites-increasingly-use-https-google

Over 60% of Sites Loaded via Chrome Use HTTPS, Says Google

The number of websites that protect traffic using HTTPS has increased considerably in the past months, according to data shared by Google last week.

The tech giant says 64% of websites loaded via Chrome on Android are now protected by HTTPS, up from 42% one year ago. There is also a significant improvement in the case of Mac and Chrome OS – in both cases, 75% of Chrome traffic is protected, up from 60% and 67%, respectively.

Data from Google shows that 67% of Chrome traffic on Windows goes through an HTTPS connection, up from 40% in July 2015 and nearly 50% in July 2016.

Tomi Engdahl says:

The State Of HTTPS in Libraries

http://www.oif.ala.org/oif/?p=11883

With the recent release of tools like Certbot and HTTPSEverywhere and organizations like Let’s Encrypt, it’s becoming easier and easier for non-enterprise web administrators to add SSL certificates to their websites, thus ensuring a more secure connection between the user and server. The question which needs to be answered is, why, with so many tools available are libraries lagging behind in implementing HTTPS on library web servers?

As Tim Willis, HTTPS Evangelist at Google, said in his interview with Wired Magazine: “It’s easy for sites to convince themselves that HTTPS is not worth the hassle. But if you stick with HTTP, you may find that the set of features available to your website will decline over time.” This might have been true 10 years ago, when implementing the certificate required a unique set of skills that most librarians didn’t have, and most public libraries couldn’t afford to outsource. This is no longer the case, yet the mindset hasn’t changed.

The library field is rife with the mindset of “we’ve always done it this way,” which is why we typically lag behind and become late adopters, rather than the pioneers we like to pride ourselves on being.

Tomi Engdahl says:

Cloudflare Launches New HTTPS Interception Detection Tools

https://www.securityweek.com/cloudflare-launches-new-https-interception-detection-tools

Security services provider Cloudflare on Monday announced the release of two new tools related to HTTPS interception detection.

Occurring at times when the TLS connection between a browser and a server is not direct, but goes through a proxy or middlebox, HTTPS interception can result in third-parties accessing the transmitted encrypted content.

There are several types of known HTTPS interception, including TLS-terminating forward proxies (to forward and possibly modify traffic), antivirus and corporate proxies (to detect inappropriate content, malware, and data breaches), malicious forward proxies (to insert or exfiltrate data), leaky proxies (any proxy can expose data), and reverse proxies (legitimate, aim to improve performance).

Detecting HTTPS interception, Cloudflare says, can help a server identify suspicious or potentially vulnerable clients that connect to the network and notify users of compromised or degraded security.