You wouldn’t write your username and passwords on a postcard and mail it for the world to see, so why are you doing it online? Every time you log in to any service that uses a plain HTTP connection that’s essentially what you’re doing.

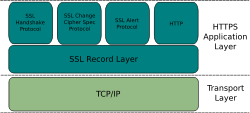

There is a better way, the secure version of HTTP—HTTPS. HTTPS has been around nearly as long as the Web, but it’s primarily used by sites that handle money. HTTPS is the combination of HTTP and TLS. Transport Layer Security (TLS) and its predecessor, Secure Sockets Layer (SSL), are cryptographic protocols that provide communications security over the Internet.

Web security got a shot in the arm last year when the FireSheep network sniffing tool made it easy for anyone to capture your current session’s log-in cookie on insecure networks. That prompted a number of large sites to begin offering encrypted versions of their services via HTTPS connections. So the Web is clearly moving toward more HTTPS connections; why not just make everything HTTPS?

The article “HTTPS is more secure, so why isn’t the Web using it?” gives some interesting background on HTTPS. There are also some practical issues that most Web developers are probably aware of.

The real problem is that with HTTPS you lose the ability to cache. For sites that have no reason to encrypt anything (you never log in and see only public information), the overhead and loss of caching that come with HTTPS just don’t make sense. Most of the content on this site, for example, has no reason to be encrypted.

The initial SSL key exchange in HTTPS also adds latency, so an HTTPS-only Web would, with today’s technology, be slower. The fact that more and more websites are adding support for HTTPS shows that users do value security over speed, so long as the speed difference is minimal.
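To put a rough number on that latency, here is a minimal sketch that counts network round trips on a fresh connection. It assumes a full TLS handshake of two round trips (as in TLS 1.0 to 1.2) on top of the TCP handshake; the function name and the 100 ms round-trip time are illustrative assumptions, not measurements.

```python
def time_to_first_byte_ms(rtt_ms: float, use_tls: bool) -> float:
    """Estimate the delay before the first response byte on a new connection."""
    round_trips = 1        # TCP three-way handshake
    if use_tls:
        round_trips += 2   # assumed full TLS handshake (two round trips)
    round_trips += 1       # HTTP request and first byte of the response
    return round_trips * rtt_ms


RTT_MS = 100  # assumed round-trip time between browser and server

plain_http = time_to_first_byte_ms(RTT_MS, use_tls=False)
https = time_to_first_byte_ms(RTT_MS, use_tls=True)
print(f"HTTP: {plain_http} ms, HTTPS: {https} ms")
```

Under these assumptions the handshake doubles the wait on a new connection, which is why reusing connections and sessions matters so much for HTTPS sites.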

Operating an HTTPS site costs more than operating a plain HTTP one: you need certificates, which cost money, and more server resources. Certificate cost is obviously not much of an issue for large Web services that have millions of dollars, but it can be a showstopper for smaller, low-budget sites.

Perhaps the main reason most of us are not using HTTPS to serve our websites is simply that it doesn’t work well with virtual hosts. There is a way to make virtual hosting and HTTPS work together, the TLS extension Server Name Indication (SNI), but so far it’s only partially implemented.
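As a rough illustration of how a client uses SNI, the sketch below relies on Python’s standard `ssl` module. The helper names `make_client_context` and `fetch_status_line` are made up for this example; the key point is the `server_hostname` argument, which is sent to the server during the handshake so that a machine hosting many sites on one IP address can choose the matching certificate.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Return a client context that verifies the certificate chain and host name."""
    return ssl.create_default_context()


def fetch_status_line(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Open a TLS connection to host and return the first line of the HTTP response."""
    context = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        # server_hostname is sent as the SNI value in the ClientHello and is
        # also the name the returned certificate is checked against.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls_sock.sendall(request.encode("ascii"))
            response = tls_sock.recv(4096)
    return response.split(b"\r\n", 1)[0].decode("ascii", errors="replace")
```

Calling `fetch_status_line("www.example.com")` (a placeholder host) needs network access, so it is left out of the block itself.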

In the end there is no real technical reason the whole Web couldn’t use HTTPS. There are only practical reasons why it isn’t happening just yet.

62 Comments

Tomi says:

Eureka! Google breakthrough makes SSL less painful

Client-side change cuts latency by 30%

http://www.theregister.co.uk/2011/05/19/google_ssl_breakthrough/

Google researchers say they’ve devised a way to significantly reduce the time it takes websites to establish encrypted connections with end-user browsers

What’s more, the technique known as False Start requires that only simple changes be made to a user’s browser and appears to work with 99 percent of active sites that offer SSL, or secure sockets layer, protection.

Tomi says:

Hackers May Have Nabbed Over 200 SSL Certificates

http://it.slashdot.org/story/11/08/31/2221248/Hackers-May-Have-Nabbed-Over-200-SSL-Certificates

Hackers may have obtained more than 200 digital certificates from a Dutch company after breaking into its network, including ones for Mozilla, Yahoo and the Tor project. That is a considerably higher number than DigiNotar acknowledged earlier this week, when it said ‘several dozen’ certificates had been acquired by attackers. The certificates were acquired in a mid-July hack of DigiNotar, Van de Looy’s source said.

http://vasco.com/company/press_room/news_archive/2011/news_diginotar_reports_security_incident.aspx

OAKBROOK TERRACE, Illinois and ZURICH, Switzerland – August 30, 2011 – VASCO Data Security International, Inc. (Nasdaq: VDSI; http://www.vasco.com) today comments on DigiNotar’s reported security incident.

http://www.digitoday.fi/tietoturva/2011/08/31/vaara-google-varmenne-livahti-myyjan-silmien-ohi/201112199/66?rss=6

According to this article, false certificates have also been created for Google.com domains…

http://nakedsecurity.sophos.com/2011/08/31/google-blacklists-247-certificates-is-it-related-to-diginotar-hacking-incident/

Google blacklists 247 certificates. Is it related to DigiNotar hacking incident?

After yesterday’s news concerning the fake certificate found in Iran that allowed an attacker to impersonate Google.com, Vasco, the parent company of certificate authority DigiNotar, released a statement explaining what happened.

Tomi says:

The DigiNotar Debacle, and what you should do about it

https://blog.torproject.org/blog/diginotar-debacle-and-what-you-should-do-about-it

Recently it has come to the attention of, well, nearly the entire world that the Dutch Certificate Authority DigiNotar incorrectly issued certificates to a malicious party or parties. Even more recently, it’s come to light that they were apparently compromised months ago or perhaps even in May of 2009 if not earlier.

This is pretty unfortunate, since correctly issuing certificates is exactly the function that a certificate authority (CA) is supposed to perform.

DigiNotar Damage Disclosure

https://blog.torproject.org/blog/diginotar-damage-disclosure

A list of 531 entries covering the currently known bad DigiNotar-related certificates:

https://blog.torproject.org/files/rogue-certs-2011-09-04.csv

https://blog.torproject.org/files/rogue-certs-2011-09-04.xlsx

Tomi says:

A Post Mortem on the Iranian DigiNotar Attack

https://www.eff.org/deeplinks/2011/09/post-mortem-iranian-diginotar-attack

More facts have recently come to light about the compromise of the DigiNotar Certificate Authority, which appears to have enabled Iranian hackers to launch successful man-in-the-middle attacks against hundreds of thousands of Internet users inside and outside of Iran.

Until we have augmented or replaced the CA system with something more secure, all of our fixes to the problem of HTTPS/TLS/SSL insecurity will be band-aids. However, some of these band-aids are important:

The first thing that Internet users should do to protect themselves is to always install browser and operating system updates as quickly as possible when they become available.

Another useful step is to configure your browser to always check for certificate revocation before connecting to HTTPS websites (in Firefox, this setting is Edit→Preferences→Advanced→Encryption→Validation→When an OCSP server connection fails, treat the certificate as invalid).

Firefox users who are particularly concerned (and willing to do more work to protect themselves) may also consider installing Convergence to warn them when certificates they see are different from certificates seen elsewhere in the world and Certificate Patrol to warn them whenever certificates change — legitimately or otherwise.

Users of Google services in particular can choose to enable two-factor authentication, which makes it hard for attackers who steal Google passwords to reuse them later. Any user of Google service with a concrete concern that someone else wants to take over their Google accounts should consider using this protection.

Z says:

In fact, we have every reason to think that the whole CA system is broken, and is just hanging on because nobody is willing to put in the effort needed to replace it.

Many of those CAs are run by reputable firms, whose business models are that they’ll give a certificate to anybody who pays them $100 (or whatever the going rate is this year), and they’ll certify that the payer’s credit card was good, and maybe, just maybe, they’ll only deliver the SSL certificate to an email address or web site that matches the keys they just certified, or do some similarly minimal level of validation. Some of the CAs, of course, require more documentation, charge more money etc..

DigiNotar has been dead since August 30, when Google, Mozilla, and Microsoft all revoked trust in their certificates. No one would ever buy a new DigiNotar certificate, since it would always pop up a scary warning to the user in a web browser. Why bother with buying a certificate from DigiNotar and dealing with the resulting end-user support issues, when you can buy from someone else and not have to deal with the problem?

Check more discussion at http://it.slashdot.org/story/11/09/15/2026223/Certificate-Blunders-May-Mean-the-End-For-DigiNotar

Tomi Engdahl says:

http://www.theregister.co.uk/2011/09/19/beast_exploits_paypal_ssl/

Hackers break SSL encryption used by millions of sites

Beware of BEAST decrypting secret PayPal cookies

Researchers have discovered a serious weakness in virtually all websites protected by the secure sockets layer protocol that allows attackers to silently decrypt data that’s passing between a webserver and an end-user browser.

The vulnerability resides in versions 1.0 and earlier of TLS, or transport layer security, the successor to the secure sockets layer technology that serves as the internet’s foundation of trust. Although versions 1.1 and 1.2 of TLS aren’t susceptible, they remain almost entirely unsupported in browsers and websites alike, making encrypted transactions on PayPal, GMail, and just about every other website vulnerable to eavesdropping by hackers who are able to control the connection between the end user and the website he’s visiting.

The demo will decrypt an authentication cookie used to access a PayPal account, Duong said.

The attack is the latest to expose serious fractures in the system that virtually all online entities use to protect data from being intercepted over insecure networks and to prove their website is authentic rather than an easily counterfeited impostor. Over the past few years, Moxie Marlinspike and other researchers have documented ways of obtaining digital certificates that trick the system into validating sites that can’t be trusted.

“BEAST is like a cryptographic Trojan horse – an attacker slips a bit of JavaScript into your browser, and the JavaScript collaborates with a network sniffer to undermine your HTTPS connection,”

Earlier this month, attackers obtained digital credentials for Google.com and at least a dozen other sites after breaching the security of disgraced certificate authority DigiNotar. The forgeries were then used to spy on people in Iran accessing protected GMail servers.

Although TLS 1.1 has been available since 2006 and isn’t susceptible to BEAST’s chosen plaintext attack, virtually all SSL connections rely on the vulnerable TLS 1.0, according to a recent research from security firm Qualys that analyzed the SSL offerings of the top 1 million internet addresses.

“The problem is people will not improve things unless you give them a good reason, and by a good reason I mean an exploit,”

Tomi Engdahl says:

http://www.vasco.com/company/press_room/news_archive/2011/news_vasco_announces_bankruptcy_filing_by_diginotar_bv.aspx

VASCO Announces Bankruptcy Filing by DigiNotar B.V.

OAKBROOK TERRACE, IL, and ZURICH, Switzerland, September 20, 2011 – VASCO Data Security International, Inc. (Nasdaq: VDSI) (www.vasco.com) today announced that a subsidiary, DigiNotar B.V., a company organized and existing in The Netherlands (“DigiNotar”) filed a voluntary bankruptcy petition under Article 4 of the Dutch Bankruptcy Act in the Haarlem District Court, The Netherlands (the “Court”) on Monday, September 19, 2011 and was declared bankrupt by the Court today.

“We are working to quantify the damages caused by the hacker’s intrusion into DigiNotar’s system and will provide an estimate of the range of losses as soon as possible,” said Cliff Bown, VASCO’s Executive Vice President and CFO.

Tomi Engdahl says:

Google Prepares Fix To Stop SSL/TLS Attacks

http://it.slashdot.org/story/11/09/22/038227/Google-Prepares-Fix-To-Stop-SSLTLS-Attacks

According to the Register, Google is pushing out a patch to fix the problem. The patch doesn’t involve adding support for TLS 1.1 or 1.2. FTFA: ‘The change introduced into Chrome would counteract these attacks by splitting a message into fragments to reduce the attacker’s control over the plaintext about to be encrypted. By adding unexpected randomness to the process, the new behavior in Chrome is intended to throw BEAST off the scent of the decryption process by feeding it confusing information.’

http://www.theregister.co.uk/2011/09/21/google_chrome_patch_for_beast/
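The fragmenting idea can be sketched roughly as follows. The 1/n-1 split shown here (one byte in the first record, the rest in the second) is one commonly described variant and is an assumption on my part, not something stated in the quoted article; `split_record` is a hypothetical helper, and the real logic lives inside the browser’s TLS library.

```python
from typing import List


def split_record(plaintext: bytes) -> List[bytes]:
    """Split application data into a 1-byte fragment followed by the remainder.

    Each fragment would be encrypted as its own TLS record, so the attacker
    no longer chooses a whole block that is encrypted with a known IV.
    """
    if len(plaintext) <= 1:
        return [plaintext]
    return [plaintext[:1], plaintext[1:]]


fragments = split_record(b"GET /account HTTP/1.1")
print(fragments)
```

The receiver simply concatenates the decrypted records, so the split is invisible to the application above TLS.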

Tomi Engdahl says:

What an epic fail for TLS. The certification system is broken by design, and now apparently the block encryption is as well (at least in the older, most widely used versions).

http://it.slashdot.org/story/11/09/25/0143252/Why-the-BEAST-Doesnt-Threaten-Tor-Users

Why the BEAST Doesn’t Threaten Tor Users

https://blog.torproject.org/blog/tor-and-beast-ssl-attack

Dean Wykoff says:

thx, ill check back later, have bookmarked you for now.

Tomi Engdahl says:

Authenticity of Web pages comes under attack

http://www.usatoday.com/tech/news/story/2011-09-27/webpage-hackers/50575024/1

The keepers of the Internet have become acutely concerned about the Web’s core trustworthiness.

The hacked firms are among more than 650 digital certificate authorities, or CAs, worldwide that ensure that Web pages are the real deal when served up by Microsoft’s Internet Explorer, Firefox, Opera, Apple’s Safari and Google’s Chrome.

Digital certificates enable consumers to submit information that travels through an encrypted connection between the user’s Web browser and a website server. The certificate ensures the Web page can be trusted as authentic. But the unprecedented attacks against CAs show how fragile that trust can be.

Tomi Engdahl says:

http://www.theinquirer.net/inquirer/news/2113108/mozilla-blocking-java-mitigate-beast-attack?WT.rss_f=Home&WT.rss_a=Mozilla+thinks+of+blocking+Java+to+mitigate+BEAST+attack

Mozilla thinks of blocking Java to mitigate BEAST attack

FIREFOX DEVELOPERS are considering blocking the Java plug-in in order to prevent a dangerous same origin policy bypass from working. The bug was exploited by security researchers Thai Duong and Juliano Rizzo in their recently disclosed attack against SSL/TLS.

The Browser Exploit Against SSL/TLS (BEAST) leverages a decade-old vulnerability in SSL and TLS 1.0 to decrypt and steal protected session cookies.

Then, in order for this code to interfere with the targeted HTTPS web site, restrictions enforced by the browser’s same origin policy (SOP) need to be bypassed. Duong and Rizzo achieved this by exploiting a vulnerability in Oracle’s Java plug-in.

“I recommend that we blocklist all versions of the Java Plugin,” Firefox developer Brian Smith proposed on Mozilla’s bug tracking platform. “My understanding is that Oracle may or may not be aware of the details of the same-origin exploit. As of now, we have no ETA for a fix for the Java plugin,” he added.

Tomi Engdahl says:

Father of SSL Talks Serious Security Turkey

http://it.slashdot.org/story/11/10/11/1743213/Father-of-SSL-Talks-Serious-Security-Turkey

Father of SSL says despite attacks, the security linchpin has lots of life left

http://www.networkworld.com/news/2011/101111-elgamal-251806.html?hpg1=bn

With all the noise about SSL/TLS it’s easy to think that something is irreparably damaged and perhaps it’s time to look for something else.

But despite the exploit — Browser Exploit Against SSL/TLS (BEAST) — and the failures of certificate authorities such as Comodo and DigiNotar that are supposed to authenticate users, the protocol has a lot of life left in it if properly upgraded as it becomes necessary, says Taher Elgamal, CTO of Axway and one of the creators of SSL.

Tomi Engdahl says:

New Attack Tool Exploits SSL Renegotiation Bug

http://it.slashdot.org/story/11/10/26/0327251/new-attack-tool-exploits-ssl-renegotiation-bug

“A group of researchers has released a tool that they say implements a denial-of-service attack against SSL servers by triggering a huge number of SSL renegotiations, eventually consuming all of the server’s resources and making it unavailable. The tool exploits a widely known issue with the way that SSL connections work.”

Considering Facebook API now requires SSL/TLS for Facebook Apps, many servers had to turn on HTTPS just for this. It’s just a matter of time until we see this widely exploited.

German hacker outfit demos SSL denial of service exploit

http://www.theinquirer.net/inquirer/news/2119948/german-hacker-outfit-demos-ssl-denial-service-exploit?WT.rss_f=Home&WT.rss_a=German+hacker+outfit+demos+SSL+denial+of+service+exploit+

GERMAN HACKER GROUP The Hacker’s Choice (THC) has released a Secure Sockets Layer (SSL) denial of service (DoS) tool that claims to require just a single machine and minimal bandwidth.

THC’s SSL-DoS tool is a proof of concept that the group claims will “disclose fishy security in SSL”.

THC cites “complexity is the enemy of security” as a mantra, and if the group’s claim that few services actually use SSL renegotiation really is true, it’s rather surprising that so few system administrators have bothered to turn the service off.

Another comment, on a different SSL-related issue:

Concerns Over Google Modifying SSL Behavior

http://yro.slashdot.org/story/11/10/25/1547219/concerns-over-google-modifying-ssl-behavior

“Google is handling SSL search queries on https://www.google.com/ in a manner significantly different than the standard, expected SSL end-to-end behavior — specifically relating to referer query data. These changes give the potential appearance of favoring sites that buy ads from Google. Regardless of the actual intentions, I do not believe that this appearance is in the best interests of Google in the long run.”

Google Modifies SSL Behavior — and the Results Are Troubling

http://lauren.vortex.com/archive/000906.html

Normally, we would expect an ordinary destination site using SSL to receive the referer query data as per standard SSL end-to-end behavior. But apparently Google is now blocking this data.

Tomi Engdahl says:

There has been another attack on a certificate authority.

Gemnet (another Dutch certificate authority) has been attacked, according to http://webwereld.nl/nieuws/108815/weer-certificatenleverancier-overheid-gehackt.html

“The website of KPN subsidiary Gemnet, provider of PKI Certificates Government, appears to be hacked. A management page gave access to documents and database. The leak is sealed.”

Security trends for 2012 « Tomi Engdahl’s ePanorama blog says:

[...] of the server certificates will face more and more problems. We can see more certificate authority bankruptcies due cyber attacks to them. Certificate attacks that have focused on the PC Web browsers, are now proven to be effective [...]

Tomi Engdahl says:

VeriSign admits multiple hacks in 2010, keeps details under wraps

http://www.computerworld.com/s/article/9223936/VeriSign_admits_multiple_hacks_in_2010_keeps_details_under_wraps?taxonomyId=17&pageNumber=2

If criminals did steal one or more SSL certificates, they could use them to conduct “man-in-the-middle” attacks, tricking users into thinking they were at a legitimate site when in fact their communications were being secretly intercepted. Or they could use them to “secure” fake websites that seem to be legitimate copies of popular Web services, using the bogus domains to steal information or plant malware.

Symantec, which began issuing SSL certificates under the VeriSign brand in the second half of 2010, denied that any hack had taken place on its watch.

“The Trust Services (SSL), User Authentication (VIP) and other production systems acquired by Symantec [from VeriSign] were not compromised by the corporate network security breach mentioned in the VeriSign, Inc. quarterly filing,” a Symantec spokeswoman said today.

According to the SEC filing, company executives were not told of the 2010 attacks until September 2011.

The SEC issued guidelines in October 2011 that pushed companies to report hacking attacks.

Tomi Engdahl says:

HTTPS Everywhere

https://www.eff.org/https-everywhere

HTTPS Everywhere is a Firefox extension produced as a collaboration between The Tor Project and the Electronic Frontier Foundation. It encrypts your communications with a number of major websites.

Many sites on the web offer some limited support for encryption over HTTPS, but make it difficult to use. For instance, they may default to unencrypted HTTP, or fill encrypted pages with links that go back to the unencrypted site. The HTTPS Everywhere extension fixes these problems by rewriting all requests to these sites to HTTPS.

HTTPS Everywhere can protect you only when you’re using sites that support HTTPS and for which HTTPS Everywhere includes rules.
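The rewriting idea can be illustrated with a short sketch. This is not the extension’s actual rule format or code; `HTTPS_CAPABLE_HOSTS` and `rewrite_to_https` are hypothetical names, and the host list is a stand-in for the rulesets the extension ships.

```python
from urllib.parse import urlsplit

# Hypothetical ruleset: hosts assumed to serve the same content over HTTPS.
HTTPS_CAPABLE_HOSTS = {"www.example.com", "mail.example.org"}


def rewrite_to_https(url: str) -> str:
    """Upgrade http:// URLs to https:// for hosts covered by the ruleset."""
    parts = urlsplit(url)
    if parts.scheme == "http" and parts.hostname in HTTPS_CAPABLE_HOSTS:
        return parts._replace(scheme="https").geturl()
    return url  # no rule for this host, or already HTTPS: leave untouched


print(rewrite_to_https("http://www.example.com/login?next=/inbox"))
print(rewrite_to_https("http://unlisted.example.net/"))
```

Hosts without a rule pass through unchanged, which mirrors the limitation described above: protection exists only where a rule exists.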

Tomi Engdahl says:

Google to strip Chrome of SSL revocation checking

http://arstechnica.com/business/guides/2012/02/google-strips-chrome-of-ssl-revocation-checking.ars

The browser will stop querying CRL, or certificate revocation lists, and databases that rely on OCSP, or online certificate status protocol, Google researcher Adam Langley said in a blog post published on Sunday. He said the services, which browsers are supposed to query before trusting a credential for an SSL-protected address, don’t make end users safer because Chrome and most other browsers establish the connection even when the services aren’t able to ensure a certificate hasn’t been tampered with.

“So soft-fail revocation checks are like a seat-belt that snaps when you crash,” Langley wrote. “Even though it works 99% of the time, it’s worthless because it only works when you don’t need it.”

SSL critics have long complained that the revocation checks are mostly useless.

Chrome will instead rely on its automatic update mechanism to maintain a list of certificates that have been revoked for security reasons.
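Langley’s seat-belt point is easier to see as a decision table. The sketch below is a simplified model, not browser code; the `Revocation` states and `accept_certificate` function are assumptions made for illustration.

```python
from enum import Enum


class Revocation(Enum):
    GOOD = "good"
    REVOKED = "revoked"
    UNAVAILABLE = "unavailable"  # the OCSP/CRL server could not be reached


def accept_certificate(status: Revocation, hard_fail: bool) -> bool:
    """Decide whether to proceed, given the result of a revocation check."""
    if status is Revocation.GOOD:
        return True
    if status is Revocation.REVOKED:
        return False
    # No answer: soft-fail proceeds anyway, hard-fail refuses the connection.
    return not hard_fail


# An attacker who can intercept traffic can also block the revocation lookup,
# which turns a revoked certificate into an "unavailable" answer.
print(accept_certificate(Revocation.UNAVAILABLE, hard_fail=False))
print(accept_certificate(Revocation.UNAVAILABLE, hard_fail=True))
```

With soft-fail the blocked lookup is accepted, which is exactly the situation in which the check was needed; hard-fail closes that gap at the cost of failed connections whenever the revocation server is down.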

Tomi Engdahl says:

Marlinspike asks browser vendors to back SSL-validator

‘Convergence’ open source dev needs vendors to balance the load

http://www.theregister.co.uk/2012/02/08/convergence/

Moxie Marlinspike is encouraging browser developers to support an experimental project to shake up the security of website authentication by moving beyond blind faith in secure sockets layer (SSL) credentials

The Convergence open-source project is designed to address at least some of the main shortcomings that underpin trust in e-commerce and other vital services, such as webmail. The technology, available as a browser add-on for Firefox, allows users to query notary servers – which they can pick – to make sure the SSL certificate served up by any particular site is kosher.

About 650 organisations are authorised to sign certificates.

Hackers able to break into the systems of any of these certificate authorities would be able to issue counterfeit credentials, subverting the whole system of trust.

Convergence, rather than relying on the public key infrastructure that ties together the current SSL system, utilises a loose confederation of notaries that independently vouch for the integrity of a given SSL certificate.
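A toy model of the notary idea might look like this. It is not Convergence’s actual protocol or API: the notary names, fingerprints, `notaries_agree` helper and the simple-majority threshold are all assumptions chosen for illustration.

```python
from typing import Dict


def notaries_agree(seen_fingerprint: str,
                   notary_reports: Dict[str, str],
                   threshold: float = 0.5) -> bool:
    """Return True if more than `threshold` of the notaries saw the same certificate.

    notary_reports maps a notary name to the certificate fingerprint that
    notary observed when it contacted the site from its own network position.
    """
    if not notary_reports:
        return False
    matches = sum(1 for fp in notary_reports.values() if fp == seen_fingerprint)
    return matches / len(notary_reports) > threshold


# Hypothetical reports: the browser sees "aa:11", as do two of three notaries.
reports = {"notary-a": "aa:11", "notary-b": "aa:11", "notary-c": "ff:99"}
print(notaries_agree("aa:11", reports))
print(notaries_agree("ff:99", reports))
```

A man-in-the-middle close to the user would show the browser a certificate that distant notaries do not see, so the fingerprints would disagree.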

Around 50 organisations have signed up to become notaries, including privacy advocates such as the EFF and technology firms including Qualys

Convergence Beta

http://convergence.io/

Tomi Engdahl says:

Flaw Found in an Online Encryption Method

http://www.nytimes.com/2012/02/15/technology/researchers-find-flaw-in-an-online-encryption-method.html

The flaw — which involves a small but measurable number of cases — has to do with the way the system generates random numbers, which are used to make it practically impossible for an attacker to unscramble digital messages.

The potential danger of the flaw is that even though the number of users affected by the flaw may be small, confidence in the security of Web transactions is reduced, the authors said.

For the system to provide security, however, it is essential that the secret prime numbers be generated randomly. The researchers discovered that in a small but significant number of cases, the random number generation system failed to work correctly.

The modern world’s online commerce system rests entirely on the secrecy afforded by the public key cryptographic infrastructure.

“This comes as an unwelcome warning that underscores the difficulty of key generation in the real world,” said James P. Hughes, an independent Silicon Valley cryptanalyst

The researchers examined public databases of 7.1 million public keys used to secure e-mail messages, online banking transactions and other secure data exchanges. They said they “stumbled upon” almost 27,000 different keys that offer no security.

Tomi Engdahl says:

99.8% Security For Real-World Public Keys

http://it.slashdot.org/story/12/02/14/2322213/998-security-for-real-world-public-keys

If you grab all the public keys you can find on the net, then you might expect to uncover a few duds — but would you believe that 2 out of every 1000 RSA keys is bad? This is one of the interesting findings in the paper ‘Ron was wrong, Whit is right’

Tomi Engdahl says:

‘Predictably random’ public keys can be cracked – crypto boffins

Battling researchers argue over whether you should panic

http://www.theregister.co.uk/2012/02/16/crypto_security/

Cryptography researchers have discovered flaws in the key generation that underpins the security of important cryptography protocols, including SSL.

Two teams of researchers working on the problem have identified the same weak key-generation problems. However, the two teams differ in their assessment of how widespread the problem is – and crucially which systems are affected. One group reckons the problem affects web servers while the second reckons it is almost completely confined to embedded devices.

EFF group: It could lead to server-impersonation attacks

An audit of the public keys used to protect HTTPS connections, based on digital certificate data from the Electronic Frontier Foundation’s SSL Observatory project, found that tens of thousands of cryptography keys offer “effectively no security” due to weak random-number generation algorithms.

Poor random-number generation algorithms led to shared prime factors in key generation. As a result, keys generated using the RSA 1024-bit modulus, the worst affected scheme, were only 99.8 per cent secure.
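Why a shared prime factor is fatal can be shown with a few lines of arithmetic. This is a minimal sketch with toy primes (real RSA primes are hundreds of digits long); `shared_prime` is a name introduced here for illustration.

```python
from math import gcd
from typing import Optional


def shared_prime(n1: int, n2: int) -> Optional[int]:
    """Return a prime factor common to two distinct RSA moduli, if there is one."""
    g = gcd(n1, n2)
    return g if 1 < g < min(n1, n2) else None


# Toy keys: both moduli accidentally reuse the prime 101.
n1 = 101 * 103
n2 = 101 * 107

p = shared_prime(n1, n2)
print(p, n1 // p, n2 // p)  # both moduli are now fully factored
```

Computing a greatest common divisor is fast even for very large numbers, so anyone who collects enough public keys can find such pairs without attacking either key directly.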

Michigan group: It just affects embedded devices

Another set of security researchers working on the same problem were able to remotely compromise a higher percentage: about 0.4 per cent of all the public keys used for SSL web site security. They said: “The keys we were able to compromise were generated incorrectly – using predictable ‘random’ numbers that were sometimes repeated.”

The Michigan group reckons the problem largely affects network devices, rather than web servers, and is certainly no reason to avoid taking advantage of the cost and convenience benefits brought by e-commerce.

There’s no need to panic as this problem mainly affects various kinds of embedded devices such as routers and VPN devices, not full-blown web servers.

4.1% of the SSL keys in our dataset, were generated with poor entropy.

Nguyen Ky Son says:

What a wonderful post; this is just what I needed to learn about. Thanks to the author for taking the trouble to write it! I hope to read many more good articles!

Tomi Engdahl says:

EFF’s HTTPS Everywhere Detects and Warns About Cryptographic Vulnerabilities

http://yro.slashdot.org/story/12/02/29/2011247/effs-https-everywhere-detects-and-warns-about-cryptographic-vulnerabilities

EFF has released version 2 of the HTTPS Everywhere browser extension for Firefox, and a beta version for Chrome. The Firefox release has a major new feature called the Decentralized SSL Observatory. This optional setting submits anonymous copies of the HTTPS certificates that your browser sees to their Observatory database allowing them to detect attacks against the web’s cryptographic infrastructure. It also allows us to send real-time warnings to users who are affected by cryptographic vulnerabilities or man-in-the-middle attacks

Tomi Engdahl says:

Most of the Internet’s Top 200,000 HTTPS Websites Are Insecure, Trustworthy Internet Movement Says

http://www.pcworld.com/article/254546/most_of_the_internets_top_200000_https_websites_are_insecure_trustworthy_internet_movement_says.html

Ninety percent of the Internet’s top 200,000 HTTPS-enabled websites are vulnerable to known types of SSL (Secure Sockets Layer) attack, according to a report released Thursday by the Trustworthy Internet Movement (TIM), a nonprofit organization dedicated to solving Internet security, privacy and reliability problems.

Half of the almost 200,000 websites in Alexa’s top one million that support HTTPS received an A for the quality of their configurations. This means that they use a combination of modern protocols, strong ciphers and long keys.

Despite this, only 10 percent of the scanned websites were deemed truly secure. Seventy-five percent — around 148,000 — were found to be vulnerable to an attack known as BEAST, which can be used to decrypt authentication tokens and cookies from HTTPS requests.

The BEAST attack was demonstrated by security researchers Juliano Rizzo and Thai Duong at the ekoparty security conference in Buenos Aires, Argentina, in September 2011. It is a practical implementation of an older theoretical attack and affects SSL/TLS block ciphers, like AES or Triple-DES.

The attack was fixed in version 1.1 of the Transport Layer Security (TLS) protocol, but a lot of servers continue to support older and vulnerable protocols, like SSL 3.0, for backward compatibility reasons. Such servers are vulnerable to so-called SSL downgrade attacks in which they can be tricked to use vulnerable versions of SSL/TLS even when the targeted clients support secure versions.
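On the server side, refusing old protocol versions is typically a configuration setting. As a hedged example using Python’s standard `ssl` module (Python 3.7 or later), the sketch below sets a protocol floor; choosing TLS 1.2 as the floor is my assumption, and `make_server_context` is a hypothetical helper name.

```python
import ssl


def make_server_context() -> ssl.SSLContext:
    """Build a server-side TLS context that refuses SSL 3.0, TLS 1.0 and TLS 1.1."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Handshakes that try to negotiate anything older than this fail, so a
    # downgrade attempt cannot push the connection to a vulnerable version.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_server_context()
print(context.minimum_version)
```

A real server would also have to load its certificate and key with `context.load_cert_chain(...)` before accepting connections; that step is left out because the file paths are site-specific. The trade-off is the one mentioned above: very old clients can no longer connect.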

SSL Pulse scans also revealed that over 13 percent of the 200,000 HTTPS-enabled websites support the insecure renegotiation of SSL connections. This can lead to man-in-the-middle attacks that compromise SSL-protected communications between users and the vulnerable servers.

“For your average Web site — which will not have anything of substantial value — the risk is probably very small,” Ristic said. “However, for sites that either have a very large number of users that can be exploited in some way, or high-value sites (e.g., financial institutions), the risks are potentially very big.”

Fixing the insecure renegotiation vulnerability is fairly easy and only requires applying a patch, Ristic said.

TIM plans to perform new SSL Pulse scans and to update the statistics on a monthly basis in order to track what progress websites are making with their SSL implementations.

Warner Scherrer says:

This can be a really good study for me, Must admit that you are certainly one of the very best bloggers I ever noticed:)Thanks for posting this informative write-up!!

Tomi Engdahl says:

Thank you for your feedback.

Tomi Engdahl says:

SSL Pulse Project Finds Just 10% of SSL Sites Actually Secure

http://it.slashdot.org/story/12/04/28/0321240/ssl-pulse-project-finds-just-10-of-ssl-sites-actually-secure

“A new project that was setup to monitor the quality and strength of the SSL implementations on top sites across the Internet found that 75 percent of them are vulnerable to the BEAST SSL attack and that just 10 percent of the sites surveyed should be considered secure. The SSL Pulse project, set up by the Trustworthy Internet Movement, looks at several components of each site’s SSL implementation to determine how secure the site actually is.”

“The data that the SSL Pulse project has gathered thus far shows that the vast majority of the 200,000 sites the project is surveying need some serious help in fixing their SSL implementations.”

UK Universities Caught With Weak SSL Security

http://news.slashdot.org/story/12/06/28/0250210/uk-universities-caught-with-weak-ssl-security

“UK Universities have been found using weak SSL security implementations on their websites. An investigation by TechWeekEurope found 17 of the top 50 British universities scored C or worse on the SSL Labs tool.”

Tomi Engdahl says:

Last year’s BEAST attack was mitigated by reconfiguring web servers to use the RC4 cipher-suite rather than AES. CRIME enables miscreants to run man-in-the-middle-style attacks and is not dependent on cipher-suites.

Source: http://www.theregister.co.uk/2012/09/07/https_sesh_hijack_attack/

Tomi Engdahl says:

SSL BEASTie boys develop follow-up ‘CRIME’ web attack

http://www.theregister.co.uk/2012/09/07/https_sesh_hijack_attack/

The security researchers who developed the infamous BEAST attack that broke SSL/TLS encryption are cooking up a new assault on the same crucial protocols.

The new attack is capable of intercepting these HTTPS connections and hijacking them.

The researchers warn that all versions of TLS/SSL – including TLS 1.2 which was resistant to their earlier BEAST (Browser Exploit Against SSL/TLS) technique – are at risk.

“By running JavaScript code in the browser of the victim and sniffing HTTPS traffic, we can decrypt session cookies,” Rizzo told Threatpost. “We don’t need to use any browser plugin and we use JavaScript to make the attack faster, but in theory we could do it with static HTML.”

Chrome and Firefox are both vulnerable to CRIME, but developers at Google and Mozilla have been given a heads up on the problem and are likely to have patches available within a few weeks.

Tomi Engdahl says:

Microsoft: As of October, 1024-Bit Certs Are the New Minimum

http://it.slashdot.org/story/12/09/09/2324259/microsoft-as-of-october-1024-bit-certs-are-the-new-minimum

“That warning comes as Microsoft prepares to release an automatic security update for Windows on Oct. 9, 2012, that will make longer key lengths mandatory for all digital certificates that touch Windows systems. … Internet Explorer won’t be able to access any website secured using an RSA digital certificate with a key length of less than 1,024 bits.”

Tomi Engdahl says:

Crack in Internet’s foundation of trust allows HTTPS session hijacking

Attack dubbed CRIME breaks crypto used to prevent snooping of sensitive data.

http://arstechnica.com/security/2012/09/crime-hijacks-https-sessions/

Researchers have identified a security weakness that allows them to hijack web browser sessions even when they’re protected by the HTTPS encryption that banks and ecommerce sites use to prevent snooping on sensitive transactions.

The technique exploits web sessions protected by the Secure Sockets Layer and Transport Layer Security protocols when they use one of two data-compression schemes designed to reduce network congestion or the time it takes for webpages to load. Short for Compression Ratio Info-leak Made Easy, CRIME works only when both the browser and server support TLS compression or SPDY, an open networking protocol used by both Google and Twitter.

CRIME is the latest black eye for the widely used encryption protocols.

Representatives from Google, Mozilla, and Microsoft said their companies’ browsers weren’t vulnerable to CRIME attacks.

Smartphone browsers and a myriad of other applications that rely on TLS are believed to remain vulnerable.

Even when a browser is vulnerable, an HTTPS session can only be hijacked when one of those browsers is used to connect to a site that supports SPDY or TLS compression.

“I don’t think anyone realized that this enables an attack on HTTP over TLS, or that an attacker could learn the value of secret cookies sent over a TLS-encrypted connection.”

Tomi Engdahl says:

The perfect CRIME? New HTTPS web hijack attack explained

BEASTie boys reveal ingenious cookie-gobbling attack

http://www.theregister.co.uk/2012/09/14/crime_tls_attack/

More details have emerged of a new attack that allows hackers to hijack encrypted web traffic – such as online banking and shopping protected by HTTPS connections.

The so-called CRIME technique lures a vulnerable web browser into leaking an authentication cookie created when a user starts a secure session with a website.

The cookie is deduced by tricking the browser into sending compressed encrypted requests for files to a HTTPS website and exploiting information inadvertently leaked during the process. During the attack, the encrypted requests – each of which contains the cookie – are slightly modified by a block of malicious JavaScript code, and the change in the size of the compressed message is used to determine the cookie’s contents character by character.
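The size leak described above can be reproduced in a few lines with Python's `zlib`. This is only a simplified sketch and not the researchers' code: the cookie value, the host name and the helper `observed_length` are all made up for illustration, encryption is left out on the assumption that a stream cipher keeps the ciphertext the same length as the compressed plaintext, and it compares one fully correct guess against one wrong guess instead of recovering the cookie character by character.

```python
import zlib

# Hypothetical secret that the browser attaches to every request.
SECRET_COOKIE = "sessionid=7f3a9c0be1d24568"


def observed_length(attacker_path: str) -> int:
    """Return the compressed size of a request whose path the attacker controls."""
    request = (
        f"GET /{attacker_path} HTTP/1.1\r\n"
        "Host: example.com\r\n"
        f"Cookie: {SECRET_COOKIE}\r\n\r\n"
    )
    # The attacker never sees the plaintext, only how long the message is.
    return len(zlib.compress(request.encode("ascii")))


# A guess that repeats the cookie lets the compressor replace the real
# cookie with a short back-reference, so the message comes out smaller.
right = observed_length("sessionid=7f3a9c0be1d24568")
wrong = observed_length("sessionid=XQZWKPLMNRTVYJGH")
print(right, wrong)
```

The correct guess produces the shorter message because the cookie is then a repeat of text the compressor has already seen. The real attack exploits the same effect one character at a time, which takes more care because a single character changes the size by very little.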

CRIME (Compression Ratio Info-leak Made Easy) was created by security researchers Juliano Rizzo and Thai Duong.

Punters using web browsers that implement either TLS or SPDY compression are potentially at risk.

The attack has been demonstrated against several sites including Dropbox, Github and Stripe.

Tomi Engdahl says:

Crime-attack:

TLS compression, the vulnerable feature, is supported on many websites, but among browsers only Chrome and Firefox use it. It has been disabled in their latest versions, so users should make sure they are running the latest version of their browser.

CERT-FI lists the following as vulnerable:

TLS 1.2 and previous versions

Chrome prior to version 21.0.1180.89

Firefox prior to version 15.0.1

GnuTLS (TLS compression is not enabled by default)

Apache 2.x series (ModSSL)

Web services administrators may want to turn TLS compression support off.

Source: http://www.tietokone.fi/uutiset/nettiliikenteen_salausmenetelmaa_vastaan_hyokataan
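For a service written in Python, turning TLS compression off might look like the sketch below. It assumes the standard `ssl` module (the `OP_NO_COMPRESSION` flag has been available since Python 3.3); the variable names are mine, and certificate loading is omitted. Apache and other servers have their own configuration switches, which are not shown here.

```python
import ssl

ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
# OP_NO_COMPRESSION tells OpenSSL never to negotiate TLS-level compression,
# which removes the message-size side channel that CRIME depends on.
ctx.options |= ssl.OP_NO_COMPRESSION

compression_disabled = bool(ctx.options & ssl.OP_NO_COMPRESSION)
print(compression_disabled)
```

This only covers compression at the TLS layer. SPDY header compression, the other route mentioned in the reports, has to be dealt with separately.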

Media Manchester says:

THanks so much for your comments………Web Design.

Tomi Engdahl says:

HTTPS Everywhere plugin from EFF protects 1,500 more sites

The browser extension makes it easier to connect to encrypted websites.

http://arstechnica.com/security/2012/10/https-everywhere-plugin-from-eff-protects-1500-more-sites/

Members of the Electronic Frontier Foundation have updated their popular HTTPS Everywhere browser plugin to offer automatic Web encryption to an additional 1,500 sites, twice as many as previously offered.

A previous update to HTTPS Everywhere introduced an optional feature known as the Decentralized SSL Observatory. It detects and warns about possible man-in-the-middle attacks on websites a user is visiting. It works by sending a copy of the site’s SSL certificate to the EFF’s SSL Observatory. When EFF detects anomalies, it sends a warning to affected end users.

Tomi Engdahl says:

One year on, SSL servers STILL cower before the BEAST

70% of sites still vulnerable to cookie monster

http://www.theregister.co.uk/2012/10/18/ssl_security_survey/

The latest monthly survey by the SSL Labs project has discovered that many SSL sites remain vulnerable to the BEAST attack, more than a year after the underlying vulnerability was demonstrated by security researchers.

BEAST is short for Browser Exploit Against SSL/TLS. The stealthy piece of JavaScript works with a network sniffer to decrypt the encrypted cookies that a targeted website uses to grant access to restricted user accounts.

The root cause of the BEAST attack, first outlined by security researchers in September 2011, is a vulnerable ciphersuite on servers. The dynamics of the CRIME attack are more complex but capable of being thwarted at the browser or quashed on a properly updated and configured server.

Tomi Engdahl says:

Add self-signed certs to Chrome

http://www.bit-integrity.com/2012/10/while-chrome-is-excellent-browser-there.html

While Chrome is an excellent browser, there isn’t a quick and easy method to convince it to stop freaking out over self-signed or custom SSL certificates. For the majority of users this is probably a good thing, however for sys-admins or developer types there has to be a better way. This bash script takes the hassle out of importing certificates to make Chrome be quiet.

Tomi Engdahl says:

Browser vendors rush to block fake google.com site cert

http://www.theregister.co.uk/2013/01/04/turkish_fake_google_site_certificate/

Google and other browser vendors have taken steps to block an unauthorized digital certificate for the “*.google.com” domain that fraudsters could have used to impersonate the search giant’s online services.

According to a blog post by software engineer Adam Langley, Google’s Chrome team first discovered a site using the fraudulent certificate on Christmas Eve. Upon investigation, they were able to trace the phony credential back to Turkish certificate authority Turktrust, which quickly owned up to the problem.

It seems that in August 2011, Turktrust mistakenly issued two intermediate certificates to one of its customers, instead of the ordinary SSL certificates it should have issued.

Tomi Engdahl says:

Nokia Admits Decrypting User Data But Denies Man-in-the-Middle Attacks

http://www.techweekeurope.co.uk/news/nokia-decrypting-traffic-man-in-the-middle-attacks-103799

Nokia says it does decrypt some customer information over HTTPS traffic, but isn’t spying on people

Nokia has rejected claims it might be spying on users’ encrypted Internet traffic, but admitted it is intercepting and temporarily decrypting HTTPS connections for the benefit of customers.

A security professional alleged Nokia was carrying out so-called man-in-the-middle attacks on its own users. Gaurang Pandya, currently infrastructure security architect at Unisys Global Services India, said in December he saw traffic being diverted from his Nokia Asha phone through to Nokia-owned proxy servers.

Pandya wanted to know if SSL-protected traffic was being diverted through Nokia servers too. Yesterday, in a blog post, Pandya said Nokia was intercepting HTTPS traffic and could have been snooping on users’ content, as he had determined by looking at DNS requests and SSL certificates using Nokia’s mobile browser.

Nokia said it was diverting user connections through its own proxy servers as part of the traffic compression feature of its browser, designed to make services speedier. It was not looking at any encrypted content, even though it did temporarily decrypt some information. This could still be defined as a man-in-the-middle attack, although Nokia says no data is being viewed by its staff.

“The compression that occurs within the Nokia Xpress Browser means that users can get faster web browsing and more value out of their data plans,” a spokesperson said, in an email sent to TechWeekEurope.

Tomi Engdahl says:

Google to beef up SSL encryption keys

Will double key length to 2048-bit by the end of 2013

http://www.theinquirer.net/inquirer/news/2270624/google-to-beef-up-ssl-encryption-keys

SOFTWARE HOUSE Google has announced plans to upgrade its Secure Sockets Layer (SSL) certificates to 2048-bit keys by the end of 2013 to strengthen its SSL implementation.

“We’re also going to change the root certificate that signs all of our SSL certificates because it has a 1024-bit key,” McHenry said.

“Most client software won’t have any problems with either of these changes, but we know that some configurations will require some extra steps to avoid complications. This is more often true of client software embedded in devices such as certain types of phones, printers, set-top boxes, gaming consoles, and cameras.”

F-Secure’s security researcher Sean Sullivan advised, “By updating its SSL standards, Google will make it easier to spot forged certificates.”

Tomi says:

HTTP 2.0 May Be SSL-Only

http://it.slashdot.org/story/13/11/13/1938207/http-20-may-be-ssl-only

“In an email to the HTTP working group, Mark Nottingham laid out the three top proposals about how HTTP 2.0 will handle encryption. The frontrunner right now is this: ‘HTTP/2 to only be used with https:// URIs on the “open” Internet. http:// URIs would continue to use HTTP/1.’ This isn’t set in stone yet.”

“The big goal here is to increase the use of encryption on the open web.”

Comments:

People think that adding encryption to something makes it more secure. No, it does not. Encryption is worthless without secure key exchange, and no matter how you dress it up, our existing SSL infrastructure doesn’t cut it. It never has. It was built insecure. All you’re doing is adding a middle man, the certificate authority, that somehow you’re supposed to blindly trust to never, not even once, fuck it up and issue a certificate that is later used to fuck you with. http://www.microsoft.com can be signed by any of the over one hundred certificate authorities in your browser. The SSL protocol doesn’t tell the browser to check all hundred plus for duplicates; it just goes to the one that signed it and asks: Are you valid?

The CA system is broken. It is so broken it needs to be put on a giant thousand mile wide sign and hoisted into orbit so it can be seen at night saying: “This system is fucked.” Mandating a fucked system isn’t improving security!

Show me where and how you plan on making key exchange secure over a badly compromised and inherently insecure medium, aka the internet, using the internet. It can’t be done. No matter how you cut it, you need another medium through which to do the initial key exchange. And everything about SSL comes down to one simple question: Who do you trust? And who does the person you trusted, in turn, trust? Because that’s all SSL is: It’s a trust chain. And chains are only as strong as the weakest link.

Break the chain, people. Let the browser user take control over who, how, and when, to trust.

If everything is to go SSL, we now need widespread “man-in-the-middle” intercept detection. This requires a few things:

SSL certs need to be published publicly and widely, so tampering will be detected.

Any CA issuing a bogus or wildcard cert needs to be downgraded immediately, even if it invalidates every cert they’ve issued. Browsers should be equipped to raise warning messages when this happens.

MITM detection needs to be implemented within the protocol. This is tricky, but possible.
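The first requirement in that list, checking a certificate against a publicly published copy, can be sketched as a fingerprint comparison. This is only an illustration of the idea and not an existing protocol feature: the helper names `fingerprint` and `matches_published` are hypothetical, and the byte strings are placeholders for real DER-encoded certificates, which in Python could be obtained from `SSLSocket.getpeercert(binary_form=True)`.

```python
import hashlib
import hmac


def fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(der_cert).hexdigest()


def matches_published(der_cert: bytes, published: str) -> bool:
    """Check the certificate we were served against a published fingerprint."""
    return hmac.compare_digest(fingerprint(der_cert), published.lower())


# Placeholder bytes standing in for real certificates.
genuine = b"placeholder for the site's real DER certificate"
forged = b"placeholder for a certificate injected by an interceptor"

published = fingerprint(genuine)  # what the site would publish widely
print(matches_published(genuine, published))
print(matches_published(forged, published))
```

A mismatch does not prove an attack, since sites also rotate certificates legitimately, so the published fingerprints would have to be kept up to date for this kind of check to be useful.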

Tomi Engdahl says:

How the NSA, and your boss, can intercept and break SSL

http://www.zdnet.com/how-the-nsa-and-your-boss-can-intercept-and-break-ssl-7000016573/

Summary: Most people believe that SSL is the gold-standard of Internet security. It is good, but SSL communications can be intercepted and broken. Here’s how.

Tomi Engdahl says:

Explainer: How Google’s New SSL / HTTPS Ranking Factor Works

New facts on the Google HTTPS ranking signal.

http://searchengineland.com/explainer-googles-new-ssl-https-ranking-factor-works-200492

Tomi Engdahl says:

Why Google is Hurrying the Web to Kill SHA-1

https://konklone.com/post/why-google-is-hurrying-the-web-to-kill-sha-1

Most of the secure web is using an insecure algorithm, and Google’s just declared it to be a slow-motion emergency.

Something like 90% of websites that use SSL encryption — [green lock] — use an algorithm called SHA-1 to protect themselves from being impersonated.

Unfortunately, SHA-1 is dangerously weak, and has been for a long time. It gets weaker every year, but remains widely used on the internet. Its replacement, SHA-2, is strong and supported just about everywhere.

By rolling out a staged set of warnings, Google is declaring a slow-motion emergency, and hurrying people to update their websites before things get worse. That’s a good thing, because SHA-1 has got to go, and no one else is taking it as seriously as it deserves.

As importantly, the security community needs to make changing certificates a lot less painful, because security upgrades to the web shouldn’t have to feel like an emergency.

An attack on SHA-1 feels plenty viable to me

In 2005, cryptographers proved that SHA-1 could be cracked 2,000 times faster than predicted. It would still be hard and expensive — but since computers always get faster and cheaper, it was time for the internet to stop using SHA-1.

Then the internet just kept using SHA-1. In 2012, Jesse Walker wrote an estimate, reprinted by Bruce Schneier, of the cost to forge a SHA-1 certificate. The estimate uses Amazon Web Services pricing and Moore’s Law as a baseline.

Walker’s estimate suggested then that a SHA-1 collision would cost $2M in 2012, $700K in 2015, $173K in 2018, and $43K in 2021. Based on these numbers, Schneier suggested that an “organized crime syndicate” would be able to forge a certificate in 2018, and that a university could do it in 2021.
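The quoted figures can be sanity-checked with a little arithmetic. The sketch below assumes that "Moore's Law" here means the cost halves every 18 months, which works out to a factor of four every three years; that reading is my assumption, since the article only says the estimate uses Moore's Law as a baseline.

```python
# Cost figures exactly as quoted in the article (US dollars).
quoted = {2012: 2_000_000, 2015: 700_000, 2018: 173_000, 2021: 43_000}

# Ratio between each pair of consecutive three-year steps.
years = sorted(quoted)
ratios = {f"{a}->{b}": quoted[a] / quoted[b] for a, b in zip(years, years[1:])}

# Assumed model: cost halves every 18 months, i.e. divides by 4 every 3 years.
projected = {y: quoted[2015] / 4 ** ((y - 2015) / 3) for y in (2018, 2021)}

for step, ratio in ratios.items():
    print(step, round(ratio, 2))
for year, cost in projected.items():
    print(year, round(cost))
```

Starting from the 2015 figure, the model gives about $175K for 2018 and about $44K for 2021, close to the quoted $173K and $43K. The 2012 to 2015 step is smaller than a factor of four, which may simply reflect rounding in the quoted $2M.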

In 2012, researchers uncovered a malware known as Flame.

Flame relied on an SSL certificate forged by engineering a collision with SHA-1’s predecessor, MD5.

And it’s a funny story about MD5, because, like SHA-1, it was discovered to be breakably weak a very long time ago, and then, like SHA-1, it took a horrifying number of years to rid the internet of it.

What browsers are doing

Microsoft was the first to announce a deprecation plan for SHA-1, where Windows and Internet Explorer will distrust SHA-1 certificates after 2016. Mozilla has decided on the same thing. Neither Microsoft nor Mozilla have indicated they plan to change their user interface in the interim to suggest to the user that there’s a problem.

Google, on the other hand, recently dropped a truth bomb by announcing that Chrome would show warnings to the user right away, because SHA-1 is just too weak.

To help with the transition, I’ve built a small website at shaaaaaaaaaaaaa.com that checks whether your site is using SHA-1 and needs to be updated.

Requesting a new certificate is usually very simple. You’ll need to generate a new certificate request that asks your CA to use SHA-2, using the -sha256 flag.

openssl req -new -sha256 -key your-private.key -out your-domain.csr

SHA-1 roots: You don’t need to worry about SHA-1 root certificates that ship with browsers, because their integrity is verified without using a digital signature.